STEM EXPERT SPOTLIGHT

Matthew D. Lew, PhD, is an assistant professor of electrical and systems engineering at Washington University in St. Louis. He earned a PhD in Electrical Engineering from Stanford University in 2015 and started his lab at Washington University shortly thereafter. His lab specializes in developing computational super-resolution microscopes to sense the movements of and interactions between molecules in chemical and biological systems. He teaches undergraduate and graduate courses on applied linear algebra and modern optical imaging. Over the past 5 years, he has partnered with the Saint Louis Science Center to engage Washington University students in Portal to the Public, a workshop series educating scientists on how to communicate their work in an informal learning environment.

Photo Credit: Whitney Curtis

Imagine everything that happens when you snap a photo on your smartphone. A “shutter” opens within the sensor of your phone (most shutters these days are electronic, not mechanical). Millions or even billions of light particles, called photons, excite electrons within the sensor to generate a current, which is converted into digital ones and zeros (bits) that form the digital facsimile of your photo. However, the picture isn’t perfect: the background could be too bright, the phone’s flash may make skin tones too blue, and the sensor itself might add blocky noise to your perfect composition. Your phone’s software magically corrects all of these blemishes, and voilà, a beautiful masterpiece appears on your screen.

Imaging scientists study both the physics of how images are captured (How does light interact with cells under a microscope? How does neural activity in the brain generate magnetic fields?) and the mathematics and statistics of how we can use those images to perform real-world tasks (Is there a tumor in this image? How far is the pedestrian from my car?). Historically, imaging scientists focused on improving physical instruments, for example by creating lenses with fewer aberrations or electromagnets capable of generating stronger magnetic fields. After the dawn of the digital age, however, they began pursuing a revolutionary idea: could a physical instrument and a computer work together, rather than one after the other, to produce more useful images? Thus the modern form of computational imaging was born. Its prospects are tantalizing: by uniquely blending physics, mathematics and computation, it enables humanity to study objects and processes that could not otherwise be seen.

Scientists and engineers have worked for centuries to build microscopes capable of seeing the tiny molecular machines at work in biology: the DNA, proteins and other biomolecules that enable life. Studies of these machines were long held back by a belief, dating to the 1870s, that no light microscope could ever achieve a resolution better than about half the wavelength of light, or approximately 250 nanometers (nm), one hundred times the size of a protein. In 2014, three scientists, Eric Betzig, Stefan W. Hell and William E. “W. E.” Moerner, were awarded the Nobel Prize in Chemistry for the development of super-resolved fluorescence microscopy. These technologies break the so-called “diffraction barrier” of light microscopy and produce images with details finer than 250 nm, but not by building bigger and better lenses.
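
The 250 nm figure follows from Ernst Abbe’s classic estimate of the smallest resolvable spacing, d = λ/(2 NA), where λ is the wavelength of the light and NA is the numerical aperture of the objective lens. As a rough worked example, assuming green light (λ ≈ 500 nm) and NA ≈ 1 (illustrative values, not taken from the article), the “half the wavelength” rule of thumb falls out directly:

$$
d = \frac{\lambda}{2\,\mathrm{NA}} \approx \frac{500\ \mathrm{nm}}{2 \times 1.0} = 250\ \mathrm{nm}
$$

With NA ≈ 1.4, typical of high-end oil-immersion objectives, the same formula gives about 180 nm, which is still far larger than a protein.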

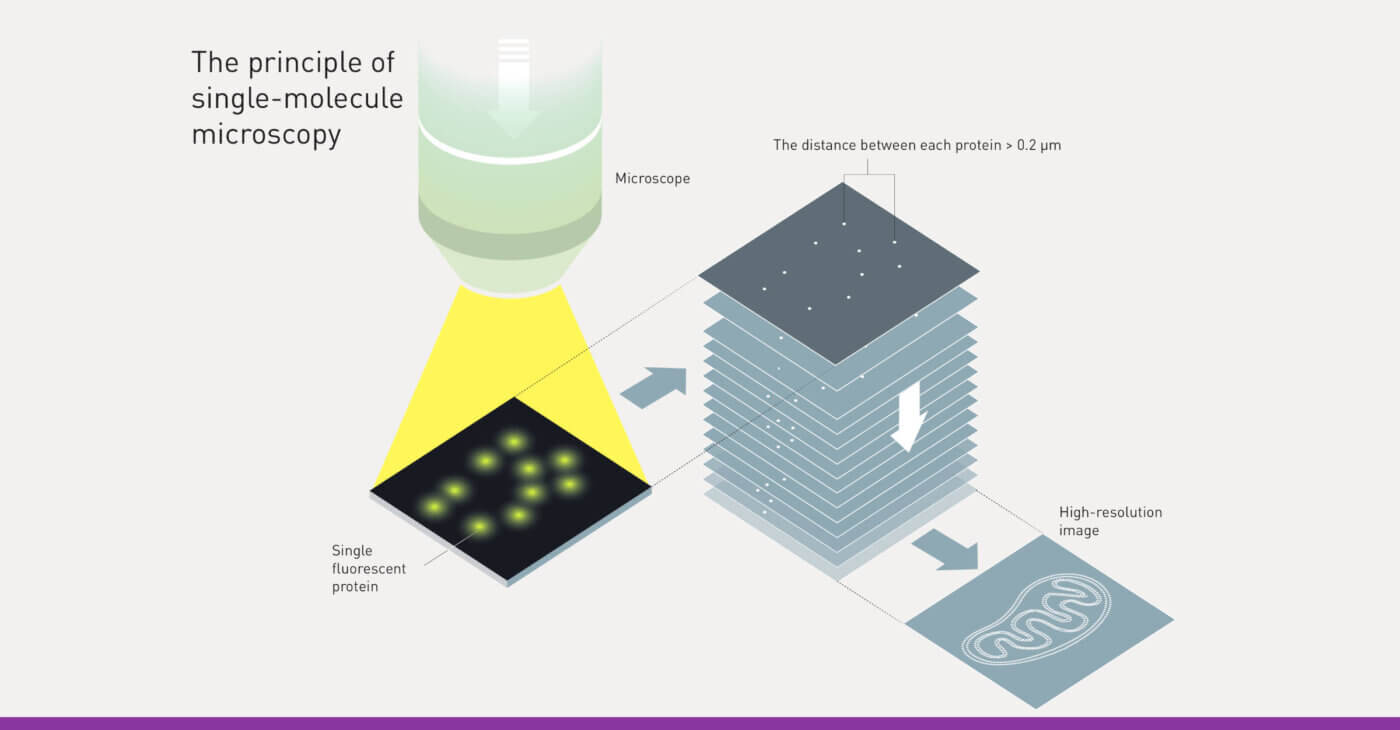

Instead, one flavor of super-resolved microscopes, called single-molecule localization microscopes (SMLM), combines single molecules and two big ideas in a surprising yet simple way. Step “zero” is detecting specific target molecules one at a time in a cell, no easy feat since the sample holds a staggering number of molecules, on the order of Avogadro’s number (10²³).

Illustration: © Johan Jarnestad/The Royal Swedish Academy of Sciences

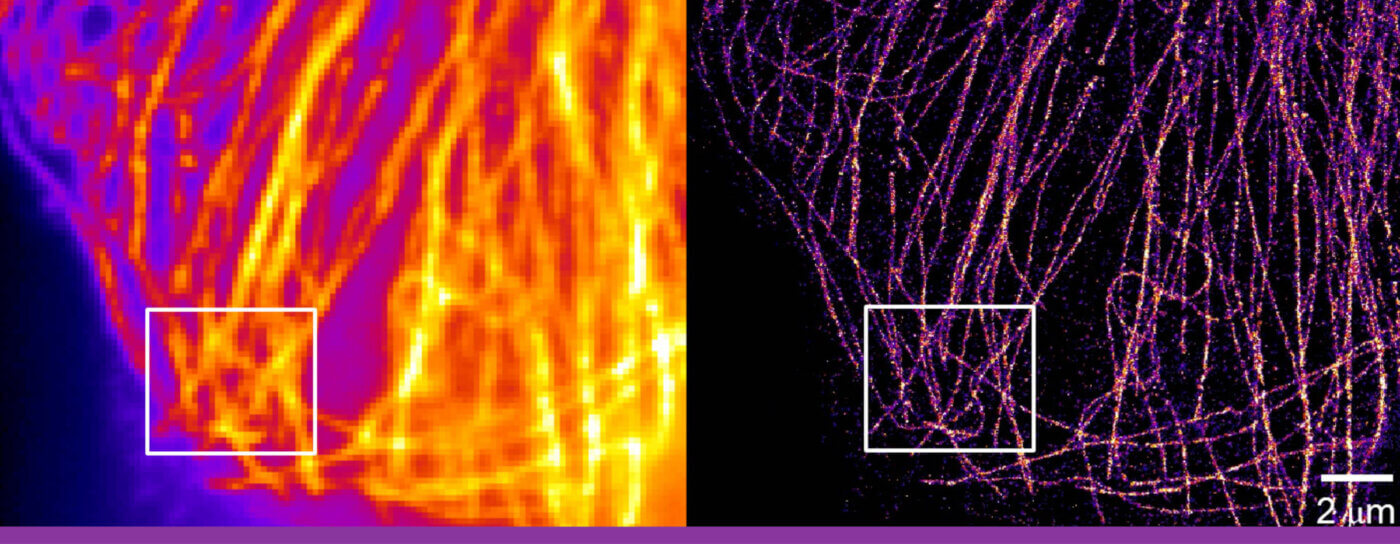

Microtubules in a fixed BSC-1 cell: on the left, a diffraction-limited image; on the right, a super-resolution image.

Photo Credit: WeoMoe, Wikimedia Commons

Next, scientists need to make the molecules blink, like lights on a Christmas tree. If all the lights (the fluorescent molecules) emit at the same time, their images overlap in the optical microscope, and the image of the structure is blurry. To make the molecules blink “on” and “off” over time instead, scientists can shine special colors of light on them or add chemicals (oxidizing and reducing agents, for example).

Once the molecules are blinking, the computational imaging process begins. Special algorithms perform a process called “localization,” computing the position of each molecule within each image using “Regularized Maximum Likelihood” (RML) methods: flexible algorithms that combine established physics and mathematics with scientifically reasonable “guardrails.” Importantly, because the center of a blurry spot can be pinpointed far more precisely than its width, the uncertainty in each molecule’s location is much smaller than the size of its image on the camera. GPS works similarly: it can locate you at the top of a mountain to within a few meters, far less than the width of the mountain. The final image of the biological structure is then reconstructed point by point, or molecule by molecule, just as a pointillist painter creates a masterpiece. Surprisingly, the resolution of this masterpiece is orders of magnitude better than that of standard microscopes, approaching 1 nm, the size of a single molecule.
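
The localization idea can be illustrated with a small simulation. This is a minimal sketch under strong simplifying assumptions, not the Lew Lab’s RML software: it models the microscope’s blur as a Gaussian of known width (a hypothetical `PSF_SIGMA_NM` of 100 nm, roughly a 250 nm-wide spot), ignores camera pixels and background light, and uses plain, unregularized maximum likelihood, which under those assumptions reduces to averaging the photon positions. The helper names (`detect_photons`, `localize`, `precision_experiment`, `blinking_experiment`) are illustrative. The sketch shows two things: the scatter of the position estimates shrinks roughly as the blur width divided by the square root of the photon count (σ/√N), so about 1,000 photons pin a 100 nm blur down to a few nanometers; and letting only one of two closely spaced molecules blink “on” per frame allows each to be localized separately, building up the picture point by point.

```python
import math
import random
import statistics

# Hypothetical value (not from the article): standard deviation of a Gaussian
# approximation to the microscope's blur, in nanometers.
PSF_SIGMA_NM = 100.0


def detect_photons(x_nm, y_nm, n_photons, rng):
    """Simulate where each photon from one molecule lands on the camera.

    Each photon is displaced from the molecule's true position by Gaussian blur.
    Pixelation and background light are ignored to keep the sketch short.
    """
    return [(rng.gauss(x_nm, PSF_SIGMA_NM), rng.gauss(y_nm, PSF_SIGMA_NM))
            for _ in range(n_photons)]


def localize(photons):
    """Maximum-likelihood position estimate under the idealized model above.

    For a Gaussian blur of known width, with no pixels or background, the
    likelihood is maximized by the average photon position.
    """
    xs, ys = zip(*photons)
    return statistics.fmean(xs), statistics.fmean(ys)


def precision_experiment(n_photons, n_trials, seed=0):
    """Localize one molecule at the origin many times; return the x-scatter (nm)."""
    rng = random.Random(seed)
    estimates_x = [localize(detect_photons(0.0, 0.0, n_photons, rng))[0]
                   for _ in range(n_trials)]
    return statistics.stdev(estimates_x)


def blinking_experiment(separation_nm, n_frames, n_photons, seed=1):
    """Two molecules closer than the diffraction limit; one emits per frame."""
    rng = random.Random(seed)
    positions = [(-separation_nm / 2, 0.0), (separation_nm / 2, 0.0)]
    localizations = []
    for _ in range(n_frames):
        x, y = rng.choice(positions)  # "blinking": pick the molecule that is on
        localizations.append(localize(detect_photons(x, y, n_photons, rng)))
    return localizations  # the point-by-point ("pointillist") reconstruction


if __name__ == "__main__":
    spread = precision_experiment(n_photons=1000, n_trials=500)
    print(f"blur width ~{PSF_SIGMA_NM:.0f} nm, scatter ~{spread:.1f} nm")
    print(f"sigma/sqrt(N) prediction: {PSF_SIGMA_NM / math.sqrt(1000):.1f} nm")

    points = blinking_experiment(separation_nm=50.0, n_frames=200, n_photons=1000)
    left = [x for x, _ in points if x < 0]
    right = [x for x, _ in points if x >= 0]
    gap = statistics.fmean(right) - statistics.fmean(left)
    print(f"recovered separation ~{gap:.1f} nm")
```

Two molecules 50 nm apart would merge into a single blur if both emitted at once, yet their separate localizations recover the 50 nm gap. Real localization software must also handle pixelated cameras, background fluorescence and overlapping emitters, which is one reason practical methods add regularization and more detailed physical models.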

The future of computational imaging is bright: in 2018, the McKelvey School of Engineering at WashU launched an interdisciplinary PhD program in Imaging Science. Computational imaging (specifically, engineering how a scene is illuminated and how the resulting images are analyzed) is at the heart of innovative consumer technologies like Microsoft Kinect and Apple’s Face ID. Computational imaging is also improving the capabilities of medical imaging, enabling MRI, SPECT and CT scans to create higher-resolution images, with greater sensitivity, more quickly. Finally, in the Lew Lab at Washington University, students are creating new optical microscopes that bend light so that scientists can study both where molecules are and how they are oriented or organized within biological samples. These new capabilities aim to reveal the hidden links between how biological molecules are organized and tough-to-fight neurodegenerative diseases like Alzheimer’s and Parkinson’s.

While it’s difficult to predict what new innovations are around the corner, one thing is clear. Imaging scientists are demonstrating the age-old adage again and again: seeing is believing.
