SharpShooter UAS platform Image credit: Siddharth Shekar

DRONES:

Workhorses of the Future

DR. SRIKANTH GURURAJAN

Dr. Srikanth Gururajan is an Associate Professor of Aerospace Engineering at Saint Louis University. He leads the Aircraft Computational and Resource Aware Fault Tolerance (AirCRAFT) Lab, where his team of graduate and undergraduate students works on designing, fabricating and testing novel drones, and on using artificial intelligence and machine learning to teach and train them to behave well and be good drones, all in the service of humanity. He lives in St. Louis County with his family and pup, Biscuit.

It’s the morning of your birthday, and a message on your phone alerts you to a delivery on your porch. You open the door expecting to see your friendly delivery driver, but instead you hear the hum of a delivery drone hovering above your front yard, gently winching down a box with your gift!

This might have been fiction just a few years ago, but given the pace of innovation in the drone, or Unmanned Aerial System (UAS), field, an elementary school student today could realistically expect it to be the norm by the time they enter college. It is just one of many scenarios in which drones are expected to be used extensively in the near future.

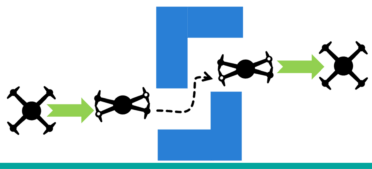

Researchers all over the world are developing, testing and validating the underlying technology to enable safe, widespread use of these UAS for many tasks, from monitoring crops, wildfires and floods to assisting with search and rescue.

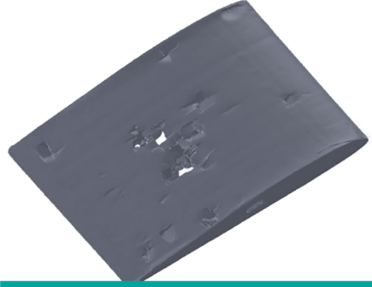

At Saint Louis University’s Aircraft Computational and Resource Aware Fault Tolerance (AirCRAFT) Lab, a team of researchers is expanding the envelope of drone applications and safety through several novel projects. Guided by the lab’s motto, “The Future is Autonomous,” the team is working to bring that future closer by developing technology to train, teach and enable drones to be the workhorses of the future. Imagine an autonomous drone that responds to your commands or gestures to pick up your groceries, watch over your yard (or your pup: “Biscuit, leave it!”), check on your prized pear tree or survey your roof after a storm. The AirCRAFT Lab is working to make these scenarios a reality.

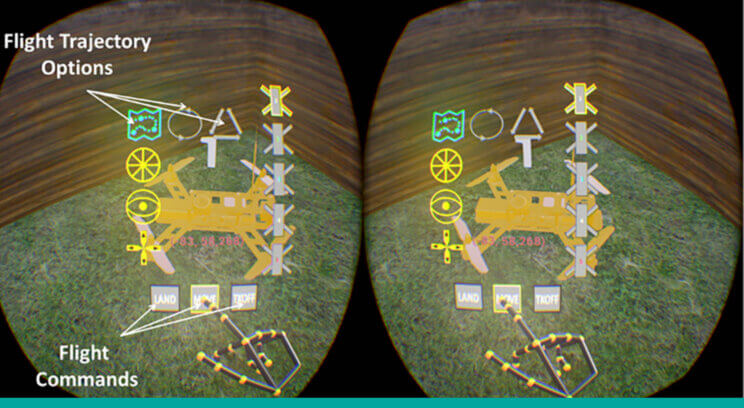

In one of the lab’s projects, a Virtual Reality (VR) environment is being developed to let users command and control drones from remote locations. Currently, UAS operation is limited to pilots who have developed the skill to manipulate flight controls over many flight hours. The AirCRAFT Lab’s work on integrating VR controls would allow an entry-level user to operate a UAS easily, much like playing a video game.

While a multirotor (most commonly a quadcopter) is what comes to mind for many people when “drones” are discussed, the term UAS covers fixed-wing uncrewed aircraft as well, similar to the model aircraft that many people grew up flying at RC flying fields. In the future, it is reasonable to expect that larger fixed-wing UAS will be used for long-distance transport or long-endurance monitoring flights, once approved by the Federal Aviation Administration (FAA).

It is conceivable that drones will one day be ubiquitous in everyday life: smart drones with high levels of autonomy, powered by AI/ML and capable of recognizing input such as hand gestures or verbal commands from a user with only basic skills. Picture a world where farmers use a VR interface from their home office to command drones to survey their fields; where a first responder can deploy a drone to a disaster area using simple hand gestures and voice commands; and where in-state commerce and deliveries are carried out by unmanned aircraft that are aware of their environment and able to adapt to severe weather to complete their missions.

The possibilities that these drones of the future bring are almost limitless, and at Saint Louis University’s AirCRAFT Lab, researchers are exploring them with a combination of cutting-edge drone technology and artificial intelligence, driven by the belief that “The Future is Autonomous.”